February 3, 2012 | Software Consultancy

For business people, the idea offers the potential for much greater control over the end result of whatever project they are engaged in, without relying entirely on testers finding every flaw. By the same token, a clear acceptance test makes misunderstandings of the specification much less likely. A well-implemented set of acceptance tests offers:

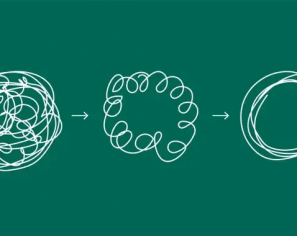

Whilst the concept is great, the practical implementation of BDD is often plagued with problems. In this post I’d like to address some of the major blocks I’ve hit in the past, and where possible describe the moments of realisation which led to an improvement in the quality of my acceptance tests. These lessons relate largely to the problems of linking the tests to automation.

Before we get down to details, there is one point that needs to be made. BDD is not a solution which is suitable for every circumstance, something not always made clear in the documentation of the various tools which support the approach. If your project does not have regular input from a business person, most of the value of writing tests in a format that non-technical personnel can easily comprehend is lost. Make no mistake: even the best implementation of this approach carries with it additional workload and maintenance compared with a more traditional testing approach.

There are dozens of tools which can be used to create BDD based automated acceptance test frameworks.

Each of them has different strengths and weaknesses, as well as slightly different approaches. It’s important to know at least enough about each of them to decide which would be best suited for your project, and this is something that I’ll be expanding on in a later blog post.

The most common way of writing BDD acceptance tests remains very close to that originally described by Dan North when introducing the idea:

Acceptance tests consist of three groups of distinct steps, “Given”, “When” and “Then”. For anyone with a testing background these are easily understood as precondition, action and expected output.
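As a rough illustration of that structure, the sketch below groups the lines of a made-up scenario under the three keywords. The scenario text and the `group_steps` helper are my own assumptions for the example, not part of any particular tool; treating "And" as a continuation of the previous keyword follows the convention used by Cucumber-style tools.

```python
# Hypothetical scenario text, written in the Given/When/Then style.
SCENARIO = """\
Given I have an account
And I am on the login page
When I log in with my credentials
Then I see my dashboard
"""


def group_steps(text):
    """Group scenario lines into precondition, action and expected output.

    Lines starting with 'And' are added to whichever group came before them.
    """
    groups = {"Given": [], "When": [], "Then": []}
    current = None
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        keyword, _, rest = line.partition(" ")
        if keyword in groups:
            current = keyword
        elif keyword != "And" or current is None:
            raise ValueError(f"unexpected step: {line!r}")
        groups[current].append(rest)
    return groups


if __name__ == "__main__":
    for keyword, steps in group_steps(SCENARIO).items():
        print(keyword, steps)
```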

This format is used by several tools, such as RSpec, Cucumber, and FitNesse (with the GivWenZen plugin). Cucumber is arguably the most widely used with a user base numbering in the hundreds of thousands.

For any test, it is important to know the context. Your narrative (or value statement) should tell you everything you need to know about the role of the primary actor in the test(s), the overall reason for the functionality and the specific behaviour being added to the system. This is normally expressed in a format along the lines of “As a <actor>”, “In order to <goal>”, “I want to <behaviour>”.

When writing a narrative for a user interface, it is especially important to ensure that your actor is properly defined. Your description should include all unique properties of the actor that result in them encountering the described behaviour.

Getting to the right level of abstraction in your tests can be a difficult prospect, and is largely dependent upon the specifics of the system under test. There are, however, a few key points to keep in mind.

Wherever possible, you should try to keep specific test data out of your test.

For example, if you are testing a user interface for a standard web system, you can remove all test data by writing your steps from the user point of view and creating personas for the various actors in the system.
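One way to picture the persona idea is a small lookup that lives in the automation layer, so the test itself only ever names a role. This is a minimal sketch; the `Persona` class, the role names and the credentials are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """Test data for one kind of actor, kept out of the acceptance test text."""
    name: str
    username: str
    password: str
    is_admin: bool = False


# Hypothetical personas; the keys are the roles named in the test steps.
PERSONAS = {
    "a registered customer": Persona("Carol", "carol", "correct-horse"),
    "an administrator": Persona("Adam", "adam", "battery-staple", is_admin=True),
}


def persona_for(role):
    """Return the persona behind a role such as 'a registered customer'."""
    try:
        return PERSONAS[role]
    except KeyError:
        raise LookupError(f"no persona defined for role {role!r}") from None
```

A step such as "Given I am a registered customer" can then resolve its data with `persona_for("a registered customer")`, and the test never mentions a username or password.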

Some of the BDD tools available also offer other approaches, such as Cucumber’s example tables, which allow you to keep the test data separate from the intent of the test.
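To show the principle rather than any tool's actual implementation, the sketch below expands a scenario template against rows of example data. The `<name>` placeholder style mirrors the one used in Cucumber's scenario outlines; the basket scenario, the data rows and the `expand` function are assumptions made for the example.

```python
# The intent of the test, with placeholders instead of concrete data.
OUTLINE = [
    "Given I have <start> items in my basket",
    "When I remove <removed> items",
    "Then I have <left> items in my basket",
]

# The test data, kept separately as one dictionary per example row.
EXAMPLES = [
    {"start": "5", "removed": "2", "left": "3"},
    {"start": "1", "removed": "1", "left": "0"},
]


def expand(outline, examples):
    """Produce one concrete list of steps per example row."""
    scenarios = []
    for row in examples:
        steps = []
        for step in outline:
            for name, value in row.items():
                step = step.replace(f"<{name}>", value)
            steps.append(step)
        scenarios.append(steps)
    return scenarios
```

Adding a new case then means adding a row to `EXAMPLES`, leaving the wording of the scenario untouched.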

Another thing which can make a huge difference to the maintainability of your test is to describe interaction at the level of intent rather than describing particular actions. If writing a test intended to ensure that a user can access the system, for example, write the steps at the level of intent (“I log in with my credentials”), rather than specific interactions (“I enter my username”, “I enter my password”, “I click submit”). While it seems unnecessary in the simple case, on a larger scale an approach like this will make your acceptance tests far more versatile. If the method of logging in should change (say an email address is now used rather than a username), the underlying automation framework can be updated and these acceptance tests remain valid. As a cautionary note, you should always be sure that you do not abstract to the point where the intent of the test is lost.
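The login example might look something like the following in the automation layer. This is only a sketch: `FakeLoginPage` is a stand-in I have invented so the example runs without a browser, and the field and button names are assumptions.

```python
class FakeLoginPage:
    """Stand-in for a real UI driver; it just records what it is asked to do."""

    def __init__(self):
        self.actions = []

    def enter(self, field, value):
        self.actions.append(("enter", field, value))

    def click(self, button):
        self.actions.append(("click", button))


def log_in_with_credentials(page, credentials):
    """Fixture behind the intent-level step 'I log in with my credentials'.

    The individual interactions live here, not in the acceptance test, so a
    change to the login form only requires a change to this function.
    """
    page.enter("username", credentials["username"])
    page.enter("password", credentials["password"])
    page.click("submit")
```

If the form switched to an email address, only the first `page.enter` call would change; every test that says "I log in with my credentials" would stay as it is.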

In my opinion, one of the greatest weaknesses of most approaches to BDD is the linking mechanism between the human-readable aspect and the code fixtures. The most common method (used by RSpec, Cucumber, JBehave, SpecFlow, FitNesse [GivWenZen], etc.) is a regular expression match between a sentence and annotated code.

This means that in order to avoid unnecessary fixtures and repeated code, you need to be using the same phrasing/sentence structure in every test.
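A stripped-down version of this linking mechanism can be sketched in a few lines. The `step` decorator and `run_step` function below are hypothetical and far simpler than what any of the tools above actually do, but they show why phrasing matters: a sentence with the same intent and different wording finds no fixture.

```python
import re

# Each entry pairs a compiled pattern with the fixture function it annotates.
STEP_REGISTRY = []


def step(pattern):
    """Register the decorated function as the fixture for matching sentences."""
    def decorator(func):
        STEP_REGISTRY.append((re.compile(pattern), func))
        return func
    return decorator


@step(r"^I log in as (\w+)$")
def log_in_as(username):
    # A real fixture would drive the system under test here.
    return f"logged in as {username}"


def run_step(sentence):
    """Find the first fixture whose pattern matches and call it with the captured groups."""
    for pattern, func in STEP_REGISTRY:
        match = pattern.match(sentence)
        if match:
            return func(*match.groups())
    raise LookupError(f"no fixture matches step: {sentence!r}")
```

Here `run_step("I log in as carol")` reaches `log_in_as`, whereas `run_step("I sign in as carol")` raises `LookupError`, and the tempting fix is to write a second, near-identical fixture.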

On small scale implementations with one or two test automation personnel, this is a limited problem. It is easy to manually check for existing statements with a similar intent (especially if you are grouping the statements logically, e.g. by functional area or page) and change your test to match. It is also easy to develop a pattern to the way in which you write tests to avoid the problem entirely.

As the scope, code-base and number of people involved grows, so does the pain. Without some way of ensuring a consistent approach to the writing of tests, your underlying test framework becomes a mess of fixtures with slightly different names but the same intent.

The best way I have found to get around this when working on a larger-scale agile project is a communal dictionary of terms. In essence, this is nothing more than a list of test statements (e.g. “I have logged in”) used in the acceptance tests written to date. This list is shared between everyone writing tests, with the ability to search for an existing term by keyword or add a new term. While this does not completely remove the pain, it at least minimises its downstream impact.
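At its simplest, such a dictionary needs only two operations: add a term and search by keyword. The in-memory sketch below is an assumption about how that could look; the `StepDictionary` class is hypothetical and a shared version would need some form of common storage behind it.

```python
class StepDictionary:
    """A searchable list of the test statements used so far."""

    def __init__(self):
        self._terms = set()

    def add(self, term):
        """Record a statement; adding the same statement twice has no effect."""
        self._terms.add(term.strip())

    def search(self, keyword):
        """Return every recorded statement containing the keyword, ignoring case."""
        needle = keyword.lower()
        return sorted(term for term in self._terms if needle in term.lower())


if __name__ == "__main__":
    terms = StepDictionary()
    terms.add("I have logged in")
    terms.add("I log in with my credentials")
    terms.add("I see my dashboard")
    # Check for an existing phrasing before inventing a new one.
    print(terms.search("log"))
```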

The exact method for doing this would vary depending on your toolset, and could take the form of anything from a shared spreadsheet or database through to a web application.

Some tools already have a mechanism for dealing with this, such as SpecFlow’s autocomplete. With this, assuming you write the human-readable test within the development IDE, existing steps are displayed to the user as they type. Something similar is also being implemented for Cucumber: a more widely usable, web-based feature file editor.

It should be noted at this point that this is not a problem common to every single BDD tool out there. For example, Concordion takes a different approach, making method calls directly from within the feature files, meaning that the sentence structure used is irrelevant as long as the intent is clear.

