November 6, 2013 | Software Consultancy

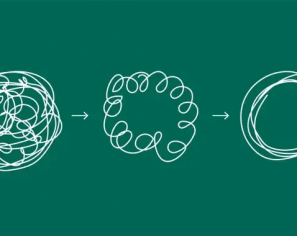

In many organisations, development and test teams have a ready answer when asked how effective their testing is, and that answer is usually wrong. Teams commonly point to test counts and code coverage statistics, which on their own are not enough to validate a test approach and risk giving stakeholders a false sense of security. In practice, we cannot fully prove the efficacy of our test strategy until after a release. Once software is in use, new defects highlight where our tests are failing to validate the software and where we need to invest effort to improve coverage. This is where many teams fail to learn and improve.

When we discuss the quality of a testing approach, what we want to know is how confident we can be that a change to the code will result in software that is usable and contains no major problems. This confidence is based on how much we can safely rely on our test pipeline to identify these problems before we release code. Organisations frequently try to quantify this level of confidence or set guidelines intended to improve the quality of tests, often with poor results.

“We have over a thousand tests!”

The least useful metric is the number of test cases implemented. Given without context, a high number serves only to give stakeholders a warm, fuzzy feeling; after all, with a thousand test cases in place, we can allow ourselves to believe that the software is well tested.

Any attempt to understand software quality from this number falls at the first hurdle.

First, there is no reliable way to relate the complexity of a system to the number of tests needed to cover all the functionality it offers.

Second, we have no idea (without significant digging) which areas those tests cover and where our coverage is lacking.

Finally, and arguably most importantly, we have no idea how useful those tests actually are without inspecting them manually. Tests often make unrealistic or nondeterministic assertions, especially when a team is under pressure to meet an expected test count.

If we accept that a test count without any context is of no use to us, the logical next step is to add some context. Code quality tools offer a number of useful metrics for determining the quality of a program, including test coverage as a percentage. This value is often touted as proof of code quality.

While test coverage is an important metric and can tell you a lot about the quality of a codebase, it becomes misleading once you try to enforce it as a development guideline.

Investigating an existing codebase and discovering that it has 5% test coverage tells you that the software was not written test-first and that there will be large areas of code where refactoring is dangerous. The instinctive reaction in many organisations is to mandate 90% coverage (or some other arbitrary value) on newly written software. As with test counts, the main problem is the assumption that this value has inherent meaning when it does not. Coverage records which code the tests executed, not whether they checked the results, so we can have tests covering every piece of code that still tell us nothing about quality.

The problem of poor assertions in tests should be addressed through pair programming and code review, which give a team a way to work together to verify that code is both well written and properly tested.

However, even if we trust that this process will not be subverted when the team is under pressure, it still doesn’t give us any way to demonstrate the effectiveness of the tests.

A robust approach to testing aims to tell us as much as possible about the quality of our software before it gets released to the end user. This is why we implement unit, integration, functional and performance tests during development. The desire to understand the effectiveness of that testing in advance is understandable, and it is why people try to infer so much from the numbers available before release.

Tracking those metrics can give you a degree of confidence and is not without value (assuming that the tests are sanity-checked), but the real proof of our test strategy comes with a release.

Defects captured after release (either to UAT or production environments) tell us whether we have problems with new features or have inadvertently caused regressions in existing functionality. This is where we learn how effective our pre-release testing was.

Defect tracking is often woefully under-managed in agile development teams. If you ensure that all defects are properly logged with the right level of information, such as the test phase, severity, functional area and type of each defect, then you have all the metrics you need to validate and improve your test strategy.

Example:

We make a release to our social media website that introduces the ability to limit posts to specific groups of friends.

New automated tests are implemented that verify the new functionality, and we do a short period of manual exploratory testing.

Happy with the new functionality, we release the new version into our UAT environment where it is used by a small group of beta users.

During this stage, we discover a problem with creating new posts that aren’t limited.

A defect is logged, including test phase (UAT), severity (high), functional area (post creation) and type (regression).
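One way to keep defects consistently logged is to give every record the same structured fields. The sketch below is a minimal Python illustration; the names `Defect`, `Phase`, `Severity` and `DefectType`, and the values in each enum, are assumptions for this example rather than any particular tracking tool's schema. It captures the UAT defect described above with its four attributes.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    # Where the defect was found (the post mentions UAT and production).
    UAT = "UAT"
    PRODUCTION = "production"


class Severity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


class DefectType(Enum):
    REGRESSION = "regression"
    NEW_FEATURE = "new feature"
    PERFORMANCE = "performance"


@dataclass(frozen=True)
class Defect:
    """One logged defect; field names are illustrative, not from a real tool."""
    summary: str
    test_phase: Phase
    severity: Severity
    functional_area: str
    defect_type: DefectType


# The defect from the example, recorded with every attribute filled in.
unlimited_post_bug = Defect(
    summary="Cannot create posts that are not limited to a friend group",
    test_phase=Phase.UAT,
    severity=Severity.HIGH,
    functional_area="post creation",
    defect_type=DefectType.REGRESSION,
)
```

Using enums rather than free text keeps the values consistent, which matters once defects are grouped and counted.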

Taken on its own, this defect tells us little, but across a larger set of failures we can begin to identify patterns that show where our tests are insufficient. A number of critical regressions in a particular functional area would clearly highlight a gap in our regression suite, while an unresponsive part of the system might reveal a gap in performance testing.

Extracting these metrics is, with most tools, a manual process of reviewing defect lists sorted and grouped by the information above, but putting the time into doing so allows your tests to evolve and improve throughout the development of a project.
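Where a tool does not do this grouping for you, a short script can take some of the manual effort out of it. The Python sketch below is illustrative only: the `find_hotspots` helper, the default threshold of three, the high/critical severity filter and the mapping from defect type to a suspected test gap are all assumptions, and the sample defects are made up. It counts serious defects by functional area and type, then flags any combination at or above the threshold.

```python
from collections import Counter
from typing import NamedTuple


class Defect(NamedTuple):
    phase: str            # e.g. "UAT" or "production"
    severity: str         # e.g. "low", "medium", "high", "critical"
    functional_area: str  # e.g. "post creation"
    defect_type: str      # e.g. "regression", "new feature", "performance"


# Hypothetical mapping from defect type to the part of the test strategy it implicates.
SUSPECTED_GAP = {
    "regression": "regression suite",
    "new feature": "feature tests",
    "performance": "performance tests",
}


def find_hotspots(
    defects: list[Defect],
    threshold: int = 3,
    severities: frozenset[str] = frozenset({"high", "critical"}),
) -> list[tuple[str, str, int, str]]:
    """Count serious defects per (area, type) and return groups at or above threshold."""
    counts = Counter(
        (d.functional_area, d.defect_type)
        for d in defects
        if d.severity in severities
    )
    return [
        (area, kind, n, SUSPECTED_GAP.get(kind, "unclassified"))
        for (area, kind), n in counts.most_common()
        if n >= threshold
    ]


# Made-up sample data for illustration.
defects = [
    Defect("UAT", "high", "post creation", "regression"),
    Defect("production", "critical", "post creation", "regression"),
    Defect("UAT", "critical", "post creation", "regression"),
    Defect("production", "high", "news feed", "performance"),
    Defect("UAT", "low", "profile page", "new feature"),
]

for area, kind, n, gap in find_hotspots(defects):
    print(f"{n} x {kind} in {area}: review the {gap}")
# prints: 3 x regression in post creation: review the regression suite
```

A flagged group is a prompt for the kind of review described above, not a verdict; the threshold and severity filter would need tuning to a project's own defect volumes.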

Metrics like code coverage can give you a sense of your testing strategy, but should not be relied upon alone. Proper defect management, and time spent analysing and understanding defects, will allow you to identify areas of weakness and continually improve your test approach.

This will increase confidence in your delivery pipeline and save time at all stages of your development cycle.

This blog is written exclusively by the OpenCredo team. We do not accept external contributions.
