July 4, 2013 | Software Consultancy

In which situations can Spring Data Hadoop (SDH) add value, and in which would it be a poor choice? This article follows on from an objective summary of the features of SDH.

SDH, following the mantra of all Spring projects, attempts to subsume Hadoop activities into the set of Spring conventions; users of the Hadoop ecosystem will, however, have distinct expectations of their own.

As the set of Hadoop activities an organisation will engage in is a superset of those supported by SDH, a division running along lines of team responsibility will inevitably occur in all but the most trivial use cases.

Criteria that would suggest a good fit for the use of SDH include:

SDH would be a poor choice of technology if its full feature-set were to be used in an environment where there are dedicated Hadoop operations staff, or where a Hadoop distribution with the full suite of ecosystem tools is deployed.

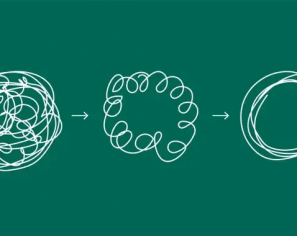

SDH addresses several challenges for which solutions already exist in the Hadoop ecosystem. Moving workflow management out of the realm of dedicated Hadoop solutions and into bespoke Spring applications should be the preserve of those organisations with Hadoop operations small enough to have them under the remit of staff fluent in Spring/Java.

To provide a simile: using the full spectrum of SDH’s job invocation and scheduling would be a little like having a Spring application also rotate its own logs – something normally the responsibility of automation provided by system administrators.

An organisation with its own Hadoop cluster will end up running into one or more compromises where the more flexible option is to forgo SDH in favour of dedicated Hadoop solutions.

A central tenet of SDH is to take the operation of Hadoop jobs and ancillary tasks and ground them firmly in the Java/Spring realm. This will benefit Spring developers looking to reduce the cost of learning Hadoop’s operational idiosyncrasies, but potentially at the expense of DevOps engineers’ visibility into how jobs are scheduled and configured.
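
To make that visibility trade-off concrete, here is a minimal sketch in plain Java. It deliberately does not use SDH's API: the class `InAppJobSchedule`, the method `runOnSchedule`, and the job passed to it are all hypothetical stand-ins, and the JDK's `ScheduledExecutorService` is assumed in place of whatever scheduler a real Spring application would wire up. The point is only that the schedule becomes an argument in application code rather than an entry in a tool that operations staff already watch.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class InAppJobSchedule {

    /**
     * Runs the given job at a fixed period, exactly `runs` times, then stops.
     * Hypothetical helper: `job` stands in for code that would submit a Hadoop job.
     */
    static void runOnSchedule(Runnable job, int runs, long periodMillis)
            throws InterruptedException {
        // A single worker thread, so invocations never overlap.
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        CountDownLatch remaining = new CountDownLatch(runs);
        try {
            scheduler.scheduleAtFixedRate(() -> {
                // Guard against extra ticks firing before shutdown takes effect.
                if (remaining.getCount() > 0) {
                    job.run();
                    remaining.countDown();
                }
            }, 0, periodMillis, TimeUnit.MILLISECONDS);
            // Block until the requested number of runs has completed.
            remaining.await();
        } finally {
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        // The schedule (3 runs, 100 ms apart) exists only in this source file.
        runOnSchedule(() -> System.out.println("submitting job (stand-in)"), 3, 100);
    }
}
```

Nothing outside the application can see or change the period here without a code change and a redeploy, which is the cost described above for teams that rely on a dedicated Hadoop scheduler for that visibility.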

The Hadoop ecosystem changes rapidly and is used in many areas where Spring is not, so it is reasonable to assume that the myriad tools will not coalesce around Spring conventions. It is also true that self-hosting a Hadoop cluster is a non-trivial undertaking, and that the specialist staff required would likely be familiar with dedicated Hadoop solutions to the challenges SDH addresses.

An organisation acting as client to a managed Hadoop cluster would likely benefit from SDH’s scheduling and workflow features. It is not hard to imagine a Spring/Java team with little operational Hadoop experience prototyping against a naive Hadoop installation, or perhaps iterating on non-critical functionality against a cluster maintained by another department. In such an example the mission-critical jobs would be managed by a traditional Hadoop scheduler, and maintained by Hadoop operations sta

This blog is written exclusively by the OpenCredo team. We do not accept external contributions.
