June 15, 2017 | Data Engineering

CockroachDB is a distributed SQL (“NewSQL”) database developed by Cockroach Labs and has recently reached a major milestone: the first production-ready, 1.0 release. We at OpenCredo have been following the progress of CockroachDB for a while, and we think it’s a technology of great potential to become the go-to solution for a having a general-purpose database in the cloud.

It has a number of interesting properties:

It felt appropriate to celebrate the 1.0 release by taking a closer look.

CockroachDB is a distributed SQL database, heavily inspired by Google’s Spanner; indeed there are many similarities. In this post, we are going to refer to Spanner and use it as a comparison a few times. We recommend reading our previous post, in which we explored Google Cloud Spanner in more detail.

One crucial difference between them is that you can deploy CockroachDB onto your own hardware; whether it’s on-premise, in the cloud, or on top of a container/scheduler solution.

A very good and detailed design document is available online, explaining CockroachDB’s internal architecture: https://github.com/cockroachdb/cockroach/blob/master/docs/design.md

Some of its most interesting aspects:

Fundamentally, CockroachDB is a transactional database engine on top of a high-performance distributed key-value store. As a result of this design the data is truly partitioned across the nodes of the database cluster and allow for scaling out in order to gain more storage space.

ANSI-SQL is fully supported, including join queries, foreign key constraints and transactions. In addition, there are some custom extensions, such as interleaved tables.

In fact the API is wire-compatible with PostgreSQL, so any client that can connect to Postgres should be able to talk to CockroachDB as well. This includes standard client libraries like JDBC drivers and 3rd party tools like SquirrelSQL too.

ACID transactions are supported, and you can use them in the usual way, via SQL commands like BEGIN or COMMIT. Since the wire compatibility with PostgreSQL, most tools and client libraries will just carry on using it as usual. This is in contrast with Google Spanner in which case a custom client is required and transactions need to be wrapped in executable blocks.

Isolation is the aspect where (as you might expect) Cockroach differs from the traditional databases. There are two levels: SERIALIZABLE and SNAPSHOT.

SERIALIZABLE provides very strong guarantees, no anomalies (as if there was only one transaction operating on the database).SNAPSHOT is more performant, especially in high-contention cases, but allows write skews to happen.ANSI isolation levels are automatically mapped to these; and you can find a more detailed description about them here: https://www.cockroachlabs.com/docs/transactions.html

Cockroach Labs are in touch with Jepsen about testing the correctness of the transaction engine and as of late 2016, CockroachDB passed the Jepsen tests: http://jepsen.io/analyses/cockroachdb-beta-20160829

The usual question for distributed systems is their place in the CAP-triangle. CockroachDB favours Consistency over Availability; making it a CP system. The system remains functional as long as you have a quorum of nodes that are able to access each other.

There is an automatic failover mechanism in case nodes are detected to be unavailable. This might also result in some dropped connections (if a node actually dies) and a temporary increase of client-side latency when a node becomes unavailable. However the system as a whole remains functional.

Like many other modern tools (this approach is particularly popular in Go-based products), CockroachDB ships as a single, self-contained binary file that acts as:

Launching a CockroachDB server is trivial – just need to start this binary via command start.

All configuration is supplied via command-line arguments, there are no config files. At a bare minimum, you need to pay attention to joining up the nodes in order to form a cluster.

This can be achieved by launching a “seed” node:

$ cockroach start --host

And then pointing the rest of the nodes to this seed:

$ cockroach start --host --join

Specifying host is not mandatory, but we recommend doing so to ensure that CockroachDB binds to the appropriate network interfaces and advertises this to its peers.

Note that the seed node is not special in any way – any node part of an existing cluster will do as join target.

Overall, launching CockroachDB and forming a cluster is straightforward.

By default all traffic will be over SSL – the appropriate certificates need to be available. This can be explicitly disabled, however doing so is not recommended in any production setup.

The server process normally stays in the foreground (unless –background is specified). This allows for easy control via systemd or containerised deployments.

Most server configuration happens through command-line flags; there is no configuration file that CockroachDB would read. Some parameters can also be supplied via environment variables (these are always just alternatives to a command-line flag).

There is a few options that can be set. Most of these are straightforward; as a starting point you will probably use “host” to control the network interface bindings and “store” to define where the data files will reside.

CockroachDB has a very flexible tagging mechanism that gives a lot of control over replica placement. Attributes can be defined via the following parameters:

attrs – to specify arbitrary key-value pairs that describe the nodelocality – specifically to describe the location / locality of this node, e.g. datacenter or region specificationsstore – besides specifying the path to the data files, it is also possible to describe some details about the nature of the storage. This is free-form, like the other attributesSpecifying everything as command-line arguments looks simple enough at glance. However if you want to specify a more sophisticated set of attributes, these command-line arguments may quickly become cumbersome to read. For example a more detailed locality configuration will look like this:

--locality=region=eu,datacenter=eu-west-1

And you can end up with storage settings like this one:

--store=path=/var/lib/cockroach/data,size=100GB,attrs=ssd

A very interesting aspect of the design of CockroachDB is that it assumes the possibility of deploying into a containerised / orchestrated environment, such as Kubernetes and this is a first-class deployment model.

While in this current post we focus on the database itself and its more general characteristics, a container-based deployment is definitely of high interest that we want to try in the future. In the meanwhile, we refer to CockroachLabs’ own description of how such a deployment would work.

There are two admin-style tools packaged into CockroachDB – a web interface and a command line tool.

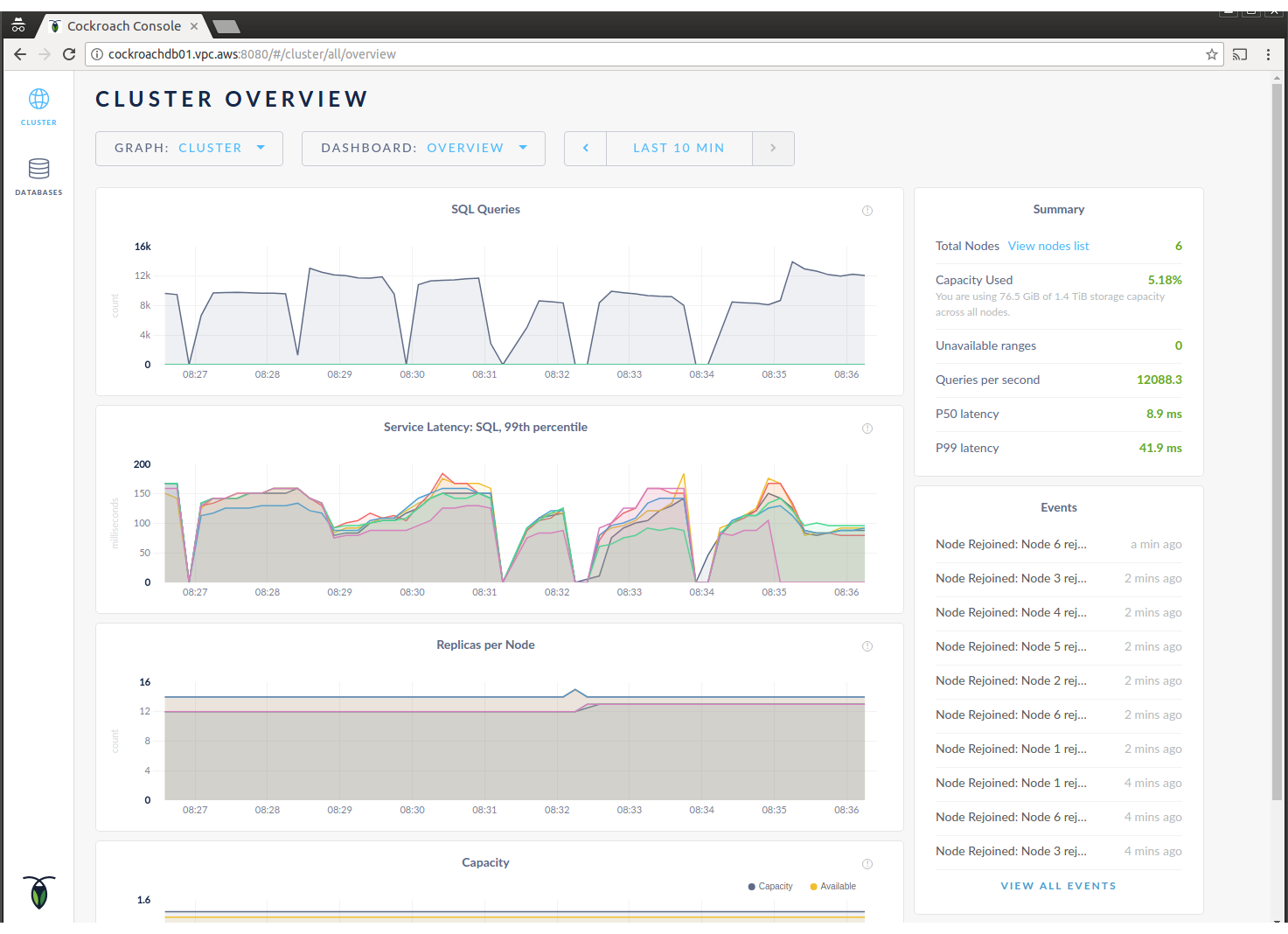

The web interface is a page for monitoring purposes – it displays a number of metrics about the CockroachDB cluster such as list of nodes, data volumes, query latencies, logs, etc… It is available and exposed by default (port 8080) and can’t be disabled. You can access it via any CockroachDB node.

The command-line tool accesses CockroachDB via the data port and allows access to creating users, certificates and configuring replication of the various tables, as well as gives some information about the cluster (list of nodes, data volumes, ranges and replication).

While this is a simple and straightforward setup, it means that administrative access to CockroachDB is exposed towards any client that wishes to connect to the database. This obviously cannot be limited by any firewall settings. Access is controlled by client certificates if CockroachDB is deployed in a secure mode.

In addition, an observation that we have made: if a CockroachDB cluster does not have “sufficient” nodes running it stops responding of any administrative queries and the web interface becomes unresponsive as well.

We have discussed this behaviour with Cockroach Labs and opened a ticket to track and improve the responsiveness of operational tools: https://github.com/cockroachdb/cockroach/issues/16489

The Web UI of CockroachDB gives basic monitoring capabilities, graphs can track the number of connections, latency, data traffic, etc…

In addition, monitoring via Prometheus is supported out-of-the-box: https://www.cockroachlabs.com/docs/monitor-cockroachdb-with-prometheus.html

We have yet to explore this in greater depth.

Developing applications on CockroachDB is straightforward, given its full SQL compatibility: everything works pretty much as expected. Even more advanced tools, like Liquibase are functional.

PostgreSQL client libraries can be used to communicate with CockroachDB, which is great from the perspective of getting started quickly. On the other hand, this means that the client will have little appreciation for the clustered nature of the database on the other side. For example a JDBC connection string might look like the one below to allow access for the whole cluster:

jdbc:postgresql://cockroachdb01.vpc.aws:26257,cockroachdb02.vpc.aws:26257,cockroachdb03.vpc.aws:26257/test?loadBalanceHosts=true

Notice the loadBalanceHosts parameter at the end of the connection string – this will make the driver connect to all the hosts listed in a round-robin fashion. Missing it will make your client connect to the first available host.

A better alternative to this is to use an external load balancer: Amazon’s ELB, HAProxy, or any preferred load balancing solution in a container-orchestrated environment.

We have not done very detailed performance testing, but rather wanted to explore some basic aspects of it, specifically scalability, joins and interleaved tables.

We have executed these tests on AWS on a 3 or 6-node CockroachDB cluster running on m4.xlarge machines. Although an Elastic Load Balancer was also provisioned, we have bypassed it for the load test in order to eliminate the potential of the ELB distorting the results (we did observe some increase in latency when running through the ELB).

Also please note that our test harness was running on a single c4.2xlarge machine, which may have been the limiting factor in some cases.

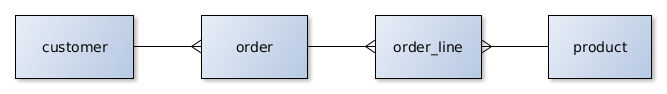

For the testing we have re-used the same datamodel that was used for the Google Spanner tests; extended by a 10-kbyte random text field on every Customer.

Our example application manages customer orders in an online store. A Customer can have many Orders which in turn contain Items (order lines) of Products.

In this case we were just reading single Customer records by primary key to establish a baseline performance. We observed roughly 13,500 transaction per second throughput with mean latency around 24ms and 99 percentile around 100ms. This is suspected to be limited by the testing infrastructure (the data volumes resulted in considerable network traffic).

We observed nearly linear scalability with these reads – on a 3-node CockroachDB cluster we measured 8,000 transactions per second with similar latencies.

Note that these numbers are comparable to what we have observed with Google Spanner.

Because ANSI SQL is fully supported in CockroachDB, it is possible to do arbitrary joins. However, we advise caution when doing so as performance can be worse than expected: implementing efficient distributed joins is non-trivial.

Cockroach Labs took a decision to start supporting functionality first and then improve on performance, so expect faster joins in subsequent releases! https://www.cockroachlabs.com/blog/better-sql-joins-in-cockroachdb/

Our join tests focused on a single use-case: to read all orders of a specific customer.

The first, naive approach is the following (this would be the first attempt in any relational database):

select

c.id, c.name, o.order_id, o.created_at

from

customer as c

inner join orders as o on c.id = o.customer_id

where

c.id = ?

This resulted in pretty poor results, single-digit transactions per second and 4-5 second average query latency.

The main reason is that CockroachDB’s query optimiser is not as sophisticated yet as it can be and currently executes a full tablescan on Orders. We would like to emphasize again that this is the current state and improvements are expected in the future.

In the meanwhile, it’s possible to get better results with slightly changing the query:

Let’s take a look using the following query:

select

c.id, c.name, o.order_id, o.created_at

from

customer as c

inner join orders as o on c.id = o.customer_id

where

c.id = ?

and o.customer_id = ?

Note that we are now explicitly specifying the customer’s id twice: once for the Customer table and once for Orders. This allows CockroachDB’s SQL engine to filter Orders more efficiently before the join is executed.

In this case we have measured 1550 queries per second with average latency of 31ms.

This is a significant improvement compared to the naive approach and the response times of the queries is now acceptable. However the throughput we can achieve is considerably worse than if we were to do single-key queries.

Please note that our schema has defined an explicit FOREIGN KEY reference between customer_id in the Orders table and id in the Customer table. This result in an automatically generated index, which is used when performing this join.

Similar to Google Spanner, CockroachDB allows explicitly interleaving tables in the database to improve join performance. Interleaved tables will be co-located with their parents on the same nodes, thus eliminating the need for a cross-node distributed join and speeding up these queries.

The syntax to create interleaved tables is straightforward, simply put INTERLEAVE IN PARENT after the CREATE TABLE statement and enumerate the key prefixes:

CREATE TABLE IF NOT EXISTS "orders" (

customer_id char(36) NOT NULL REFERENCES "customer" (id),

order_id char(36) NOT NULL,

created_at timestamp NOT NULL,

PRIMARY KEY(customer_id, order_id)

) INTERLEAVE IN PARENT customer (customer_id);

According to our tests, interleaving the tables and using the optimised query to execute the join indeed helps: we have observed 2270 queries per second, with an average latency of 20ms (our 99percentile was around 100ms).

An interesting observation that we made during our testing was uneven load on the CockroachDB cluster. This was caused by our relative low volume of data (while we had a reasonable amount of keys; our records are quite small) in combination with the way CockroachDB nodes assume ownership of data ranges.

Specifying explicitly smaller range split sizes (range_max_bytes) allowed CockroachDB to split the load more evenly and this helped in our observed cluster hot-spots.

To summarise, our impressions with CockroachDB are very good overall. It’s very easy to get started with and you get a fully distributed ANSI SQL database. Even better, it was clearly designed to work well in a container and scheduler setup – it looks much friendlier for Kubernetes deployments than many other database that we have encountered with.

It has perhaps the most sophisticated control over replica placement that we have seen in databases so far – the tagging system is extremely flexible and allows for very fine-grained control. The only drawback is that expressing all that via command-line arguments may be cumbersome and difficult to maintain.

Also, CockroachDB is still a very young product and expected to evolve a lot in the next few years. This mostly shows in the relative underdevelopment of the tools supplied and some aspects of its performance. It is very much usable in its current state, but not necessarily as mature as other products.

Be aware of join performance: while the combination of interleaved tables and carefully written queries will give you decent results, but, because of the distributed nature of the database you still have to pay more attention to it than what was usual for a traditional RDBMS.

We would also like to thank the Cockroach Labs team who have helped us a lot in our exploration of CockroachDB. The team was always very responsive and gave us helpful advices.

We would definitely recommend taking a closer look at CockroachDB in case you:

Migrating from any existing relational database (especially PostgreSQL) should be a relatively simple exercise – your client code and tools should just work out of the box. However in this case, we recommend to take some caution regarding join queries and transactional semantics.

If you have a need for more complex, ad-hoc queries, you should evaluate CockroachDB’s performance specifically for your use case. Denormalising the data should also be considered: it’s a common technique for other NoSQL databases and would help to allow CockroachDB perform certain queries faster. Also, manual optimisation and using interleaved tables can help a lot to make them run faster.

Deploying to Kubernetes looks like a really good use-case: it is a good complementer technology as you get process-level health check and load-balancing out of the box. This alleviates a lot of concerns regarding the cluster becoming unresponsive and having to deal with cluster-wide access yourself via external load balancers.

To summarise – we like CockroachDB a lot. It’s filling an important gap and offering the transactionality and richness of a SQL database while also providing the scale-out, always-on, multi-active nature of many NoSQL databases.

This blog is written exclusively by the OpenCredo team. We do not accept external contributions.

GOTO Copenhagen 2023 – The 12 Factor App For Data (Recording)

Watch the recording of our Technical Delivery Director, James Bowkett from the GOTO Copenhagen 2023 conference for his talk ‘The 12 Factor App For Data’