February 4, 2013 | Software Consultancy

This API will in future be used by a mobile client and by third parties, making it important to verify that it is functionally correct as well as clearly documented.

An additional requirement in our case is for the tests to form a specification for the API, allowing front end and back end developers to agree on the format in advance. This is something that BDD excels at, making it natural to continue to use Cucumber. This post will focus on the difficulties of attaining the appropriate level of abstraction with Cucumber while retaining the technical detail required for specification.

The first thing to identify is a tool to communicate with the API. In our case a brief investigation yielded Apache HttpClient, which allows full interaction with our REST API using JSON. Having chosen the tool, the next step is to start to build up our stories and scenarios. As with any other BDD project, this is simple to do yet difficult to do well. Creating scenarios that effectively describe the behaviour of the system while remaining maintainable as the suite grows is a task that, in our experience, is often grossly underrated.

With UI acceptance testing, the main goal is to abstract as much detail out of the test itself as possible without losing the descriptiveness of the test. Logic would suggest that the same approach would apply to API testing. A scenario would therefore consist of a simple set of human readable sentences which describe expected behaviour, with some examples of input and output where appropriate.

An excellent example of applying this approach can be found on Sampo Niskanen’s blog. In his article he shows the process of removing technical detail from scenarios to leave only a description of intent. The tests he produces as a result are suitable for any audience and make test failures easily understood. Unfortunately, the resulting tests lack the detail required for the scenarios to act as a specification for communication between technical stakeholders, in our case the back end developers and the front end team who consume the API.

A contrasting approach can be found in The Cucumber Book, where this exact point is addressed. The authors mention one of the underlying concepts of BDD, that the scenario should always be readable by the stakeholder, and go on to point out that in this situation the stakeholder is assumed to be technical and therefore able to deal with more detail.

The example scenarios therefore contain details of the HTTP methods used and the expected JSON responses. Taking the same example of an API that returns a list of fruit, we end up with two different scenarios expressing the same behaviour.

Non technical specification:

Given the system knows about the following fruit:

| name | color |

| banana | yellow |

| strawberry | red |

When the client requests a list of fruits

Then the response should contain the following fruit:

| name | color |

| banana | yellow |

| strawberry | red |

Technical specification:

Scenario: List fruit

Given the system knows about the following fruit:

| name | color |

| banana | yellow |

| strawberry | red |

When the client requests GET /fruits

Then the response should be JSON:

"""

[

{"name": "banana", "color": "yellow"},

{"name": "strawberry", "color": "red"}

]

"""

While the differences in a simple example are not vast, it is easy to see that more complex scenarios would differ significantly, most notably in the size of the JSON response included in the technical specification.

As already mentioned, the simplified scenarios do not offer sufficient detail for our technical stakeholders, which would make the more technical approach seem best, especially as it is endorsed in The Cucumber Book. Unfortunately, I don’t believe that this approach scales beyond the examples; it would result in a test suite that is very difficult to maintain.

From our point of view, including expected JSON in the scenario has several problems:

1. The API will potentially be returning large amounts of JSON on more complex scenarios, making it difficult to understand the test at a glance.

2. There will often be multiple tests for a single call to test validations and different situations. The repetition of response JSON coupled with the size mentioned above would make the tests very daunting to read, reducing the likelihood of stakeholders wanting to use them as a primary resource. Maintaining the tests in the case of changes to specification would also become a larger job.

3. API responses often contain information that we don’t know at design time which will still need to be verified. With the more descriptive and less technical approach, this is easily dealt with by describing the expected result. Covering this when specifying the expected response is almost impossible.

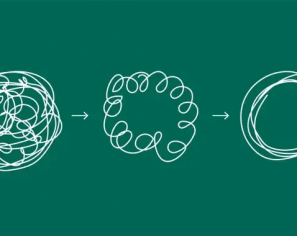

In our case, it is clear that some middle ground needs to be achieved.

Considering the above points, we have attempted to use aspects of both approaches to meet our requirements.

To extend the examples to cover some of the increased complexity mentioned in the third problem above, let’s assume that the API response to GET /fruits includes a UUID for each fruit, generated by the system when an item is added. This value is impossible for us to know when writing the test, though it will be part of the response received when adding fruit during test setup.

Firstly, we want to make the scenario itself clear and readable for API documentation and testing.

Scenario: List fruit

Given the system knows about the following fruit:

| name | color |

| banana | yellow |

| strawberry | red |

When the client requests GET /fruits

Then the response should contain the following fruit:

| name | color |

| banana | yellow |

| strawberry | red |

This looks basically the same as the simple example, with the slight difference being that we have included the exact method call in the scenario. With a well named API, the nature of the call should always be clear without the need to abstract. To meet the rest of our requirement, we are including the expected format of the response information in the background of the story.

Background:

Given that fruits are returned in the following format:

"""

{"uuid": "<fruit_uuid>",

"name": "<fruit_name>",

"color": "<fruit_colour>"}

"""

This means that our story now includes the information required by the technical users, while keeping the scenarios themselves clear to all stakeholders. When running the tests, we store the list of known fruits and their associated UUIDs within the test framework, and retrieve that information when asserting that the response contains our expected results.
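As a rough illustration of the bookkeeping described above, the sketch below keeps a per-scenario map from fruit name to the UUID returned when the fruit was added. The class and method names (`KnownFruits`, `remember`, `uuidOf`) are hypothetical and not taken from our framework; the sketch only assumes that the setup step can read the UUID from the add-fruit response.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: remembers the UUID the API returned for each fruit created during setup. */
final class KnownFruits {
    // LinkedHashMap keeps the order in which the scenario declared the fruit.
    private final Map<String, String> uuidByName = new LinkedHashMap<>();

    /** Called from the setup step once the add-fruit response has been received. */
    void remember(String name, String uuid) {
        uuidByName.put(name, uuid);
    }

    /** Looks up the stored UUID when a later step asserts on a response. */
    String uuidOf(String name) {
        String uuid = uuidByName.get(name);
        if (uuid == null) {
            // Fail loudly: the scenario is asserting on a fruit it never created.
            throw new IllegalStateException("No fruit named '" + name + "' was created in this scenario");
        }
        return uuid;
    }
}
```

One instance per scenario is enough; sharing it across scenarios would let UUIDs from an earlier scenario leak into later assertions.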

A similar approach is also used when a request includes some JSON. We also verify the format of the response by replacing the keywords in the JSON format background step with our information for each fruit and verifying that the resulting JSON snippet is present in the response. The only weakness of this approach is that we don’t include the entirety of the JSON response anywhere in the test, meaning that it is not 100% complete from the specification point of view.
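The keyword replacement described above could look something like the following sketch. It is not our actual step definition code: `FruitFormat`, `fill`, `compact` and `responseContains` are hypothetical names, and the sketch assumes that the response lists the keys in the same order as the format and that the substituted values need no JSON escaping. Whitespace outside string literals is stripped from both sides so that layout differences do not cause false failures.

```java
import java.util.Map;

/** Hypothetical helper: fills the Background format and looks for the result in a response. */
final class FruitFormat {

    /** Replaces each <keyword> in the format with the matching value. */
    static String fill(String format, Map<String, String> values) {
        String result = format;
        for (Map.Entry<String, String> entry : values.entrySet()) {
            result = result.replace("<" + entry.getKey() + ">", entry.getValue());
        }
        return result;
    }

    /** Removes whitespace that sits outside JSON string literals. */
    static String compact(String json) {
        StringBuilder out = new StringBuilder();
        boolean inString = false;
        boolean escaped = false;
        for (char c : json.toCharArray()) {
            if (inString) {
                out.append(c);
                if (escaped) {
                    escaped = false;          // the character after a backslash is taken literally
                } else if (c == '\\') {
                    escaped = true;
                } else if (c == '"') {
                    inString = false;         // closing quote
                }
            } else if (c == '"') {
                inString = true;              // opening quote
                out.append(c);
            } else if (!Character.isWhitespace(c)) {
                out.append(c);
            }
        }
        return out.toString();
    }

    /** True if the filled-in format appears in the response body, ignoring layout. */
    static boolean responseContains(String responseBody, String format, Map<String, String> values) {
        return compact(responseBody).contains(compact(fill(format, values)));
    }
}
```

A substring check like this is deliberately loose: it confirms that each expected fruit appears in the agreed format, but it does not notice extra fields or extra items in the response. A stricter variant would parse both sides with a JSON library and compare the structures.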

As an additional step to assist the debugging of problems, we also keep a log of requests and responses which is printed to the Cucumber report on test failure.
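A minimal sketch of such a log is shown below, assuming the HTTP wrapper calls `record` after every exchange. The class and its methods are hypothetical. In Cucumber-JVM, `flush` would typically be driven from an After hook, which can ask the `Scenario` object whether the scenario failed via `isFailed()`; attaching the returned text to the report is left out here because it depends on the Cucumber version in use.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: buffers request/response pairs for the current scenario. */
final class ExchangeLog {
    private final List<String> entries = new ArrayList<>();

    /** Records one request and the response it produced. */
    void record(String method, String path, String requestBody, int status, String responseBody) {
        entries.add(">> " + method + " " + path + "\n" + requestBody
                + "\n<< " + status + "\n" + responseBody);
    }

    /**
     * Returns the buffered exchanges if the scenario failed, or an empty string otherwise.
     * The buffer is cleared either way so the next scenario starts with an empty log.
     */
    String flush(boolean scenarioFailed) {
        String report = scenarioFailed ? String.join("\n\n", entries) : "";
        entries.clear();
        return report;
    }
}
```

Only printing on failure keeps the report for passing scenarios short while still giving the full conversation with the API when something goes wrong.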

Our approach is still in the early stages and will undoubtedly evolve as we address new requirements, but we feel this is a solid compromise for making our tests useful for all stakeholders, technical or otherwise.

We’d love to hear about how other teams have tackled API testing with Cucumber or any other BDD tool. Please address any comments to the author at tristan.mccarthy@opencredo.com.
